Abstract

This project proposes a method that uses neural networks to determine a person's hearing threshold. The threshold is determined by pattern recognition of EEG signals. Three preprocessing methods for reducing the dimensionality of the input data were tested and compared: the Karhunen-Loeve transform, an autoregressive (AR) model, and a neural network for dimensionality reduction.

The tested neural network has two hidden layers and achieved an 86% success rate in classifying signals with SNR = 1. The best results were achieved using the Karhunen-Loeve transform and averaging the network outputs.

Introduction

The hearing threshold of a person is defined as the weakest sound stimulus that the person can still hear. Currently, the threshold is determined manually by experts who analyze the EEG measured after the person is exposed to a sound stimulus. The measured EEG is compared with a known target EEG waveform.

This process can be recast as a pattern recognition problem. Neural networks have been used successfully in recent years to solve pattern recognition problems. In this project, we propose using neural networks to determine a person's hearing threshold.

Two principal problems complicate this method:

1. The EEG signal is represented by 512 samples, which would lead to an enormous network with 512 inputs. The input signal should therefore be represented by a smaller number of values, that is, its dimensionality should be reduced.

2. The signal-to-noise ratio (SNR) of a measured EEG is very small, on the order of 1/100.

In this project we compare three methods for dimensionality reduction:

1. Autoregressive (AR) model.

2. Karhunen-Loeve (KL) transform.

3. A dedicated neural network for dimensionality reduction.
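To make the Karhunen-Loeve option concrete, the following is a minimal sketch of how 512-sample segments could be projected onto a small number of KL coefficients. It assumes the basis is estimated from the sample covariance of a set of training segments; the function names `fit_kl_basis` and `kl_transform`, the random stand-in data, and the choice of 200 segments are illustrative assumptions, not details taken from the project.

```python
import numpy as np

def fit_kl_basis(segments: np.ndarray, order: int):
    """Estimate a KL basis: the `order` leading eigenvectors of the sample covariance."""
    mean = segments.mean(axis=0)
    centered = segments - mean
    cov = centered.T @ centered / (len(segments) - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)       # eigenvalues in ascending order
    top = np.argsort(eigvals)[::-1][:order]      # indices of the largest eigenvalues
    return mean, eigvecs[:, top]

def kl_transform(segments: np.ndarray, mean: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Project segments onto the KL basis, giving `order` coefficients per segment."""
    return (segments - mean) @ basis

# Random data used only as a stand-in for EEG segments (assumption).
rng = np.random.default_rng(0)
segments = rng.standard_normal((200, 512))

mean, basis = fit_kl_basis(segments, order=7)
features = kl_transform(segments, mean, basis)   # 7 network inputs instead of 512
```

The resulting `features` array would then serve as the network input in place of the raw samples.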

The Algorithm

1. Consecutive measured EEG segments are averaged to increase the SNR.

2. The input is preprocessed to reduce its dimensionality.

3. The preprocessed input is fed to the previously trained neural network.

4. The network output is postprocessed by averaging.
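The effect of the averaging in step 1 can be illustrated with a short sketch. It assumes that the noise is independent from segment to segment and, for simplicity, white (the project itself uses colored noise), and it measures SNR as a power ratio. The sinusoidal `template`, the noise amplitude, the group size of 100, and the helper names are all hypothetical choices made for illustration.

```python
import numpy as np

def average_segments(segments: np.ndarray, group_size: int) -> np.ndarray:
    """Average consecutive groups of `group_size` segments (leftover segments are dropped)."""
    n_groups = len(segments) // group_size
    trimmed = segments[: n_groups * group_size]
    return trimmed.reshape(n_groups, group_size, -1).mean(axis=1)

def power_snr(signal: np.ndarray, noisy: np.ndarray) -> float:
    """Ratio of signal power to the power of the residual noise."""
    noise = noisy - signal
    return float(np.mean(signal ** 2) / np.mean(noise ** 2))

rng = np.random.default_rng(0)
t = np.arange(512)
template = np.sin(2 * np.pi * t / 128)                     # hypothetical evoked response
raw = template + 10.0 * rng.standard_normal((1000, 512))   # white noise, very low SNR

averaged = average_segments(raw, group_size=100)

snr_before = power_snr(template, raw)
snr_after = power_snr(template, averaged)
gain = snr_after / snr_before   # close to group_size when noise is independent
```

Under these assumptions, averaging N segments raises the power SNR by roughly a factor of N, which is why averaging is applied before any preprocessing.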

Neural Network

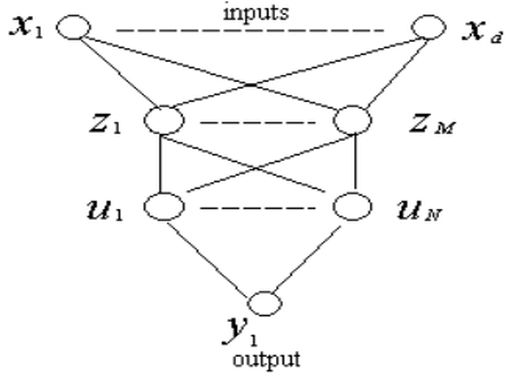

In this project we used a feedforward neural network with one or two hidden layers and a single output.

To overcome the overfitting problem, we used cross-validation during training. This method consists of evaluating the network, after each training epoch, on a separate group of EEG segments, the so-called validation group.

The network that best classified the validation group is stored and then used.
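The validation procedure described above can be sketched as follows. This is an illustrative sketch, not the project's implementation: it assumes a single hidden layer with tanh units, a sigmoid output, full-batch gradient descent on cross-entropy loss, and synthetic two-class data with 7 features. All names and hyperparameters (`train_with_validation`, `hidden=20`, `lr=0.1`, and so on) are hypothetical.

```python
import copy
import numpy as np

def forward(params, x):
    """One hidden tanh layer followed by a single sigmoid output."""
    hidden = np.tanh(x @ params["W1"] + params["b1"])
    out = 1.0 / (1.0 + np.exp(-(hidden @ params["W2"] + params["b2"])))
    return hidden, out.ravel()

def accuracy(params, x, y):
    _, out = forward(params, x)
    return float(np.mean((out > 0.5) == (y > 0.5)))

def train_with_validation(x_tr, y_tr, x_val, y_val, hidden=20, epochs=200, lr=0.1, seed=0):
    rng = np.random.default_rng(seed)
    params = {
        "W1": rng.standard_normal((x_tr.shape[1], hidden)) * 0.1,
        "b1": np.zeros(hidden),
        "W2": rng.standard_normal((hidden, 1)) * 0.1,
        "b2": np.zeros(1),
    }
    best_params, best_acc = copy.deepcopy(params), accuracy(params, x_val, y_val)
    for _ in range(epochs):
        h, out = forward(params, x_tr)
        # Gradient of the mean cross-entropy with respect to the output pre-activation.
        d_out = (out - y_tr)[:, None] / len(x_tr)
        d_hidden = (d_out @ params["W2"].T) * (1.0 - h ** 2)
        params["W2"] -= lr * (h.T @ d_out)
        params["b2"] -= lr * d_out.sum(axis=0)
        params["W1"] -= lr * (x_tr.T @ d_hidden)
        params["b1"] -= lr * d_hidden.sum(axis=0)
        # Check the validation group after every epoch and keep the best network so far.
        val_acc = accuracy(params, x_val, y_val)
        if val_acc > best_acc:
            best_params, best_acc = copy.deepcopy(params), val_acc
    return best_params, best_acc

# Synthetic stand-in data (assumption): class 1 is shifted by +1 in each feature.
rng = np.random.default_rng(1)

def make_data(n):
    y = rng.integers(0, 2, n).astype(float)
    x = rng.standard_normal((n, 7)) + y[:, None]
    return x, y

x_tr, y_tr = make_data(700)
x_val, y_val = make_data(700)
best_params, best_acc = train_with_validation(x_tr, y_tr, x_val, y_val)
```

Keeping a copy of the weights that scored best on the validation group, rather than the weights from the last epoch, is what protects the final network from overfitting the training segments. This hold-out scheme is often called early stopping or validation-based model selection.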

Results

We used 700 artificial EEG segments with colored noise and SNR = 1 for training the network and 700 segments for the validation group.

The trained network was tested with another 1400 segments with colored noise and SNR = 1.

The results are shown in the following table.

| Preprocessing method | Preprocessing parameter | Best network topology | Success percentage without postprocessing | Success percentage with postprocessing |
| --- | --- | --- | --- | --- |
| KL | KL order = 7 | H1 = 20, H2 = 10 | 82% | 93% |
| Neural network | M = 7 | H1 = 20, H2 = 1 | 63% | 64% |

The AR results are omitted because they were unsatisfactory (below 50% success).

Conclusions

The conclusions that can be drawn from this work are:

1. Using neural networks for the hearing threshold problem yields very good results: a success rate above 90% for signals with SNR = 1.

2. Finding the best method of dimensionality reduction is crucially important. The method should capture the most important features of the input signals. In this project, the KL method yielded the best results of the three.

Acknowledgements

We would like to thank our supervisor, Pavel Kissilev, for his patience and for guiding us through the project, and the Ollendorff Minerva Center Fund for its support.